
import numpy as np
import time
from mydnn import *

np.set_printoptions(formatter={'float_kind':lambda x: "{0:6.3f}".format(x)})

NUM_PATTERN = 60000
NUM_X = 784
NUM_H = 16
NUM_M = 16
NUM_Y = 10

np.random.seed(0)

# random stand-in inputs and targets: this run only measures training time
xs = np.random.uniform(0, 1, (NUM_PATTERN, 1, NUM_X))
yts = np.random.uniform(0, 1, (NUM_PATTERN, 1, NUM_Y))

WH = initialize_weight_He(NUM_X, NUM_H)
BH = np.zeros((1, NUM_H))
WM = initialize_weight_He(NUM_H, NUM_M)
BM = np.zeros((1, NUM_M))
WY = initialize_weight_Le(NUM_M, NUM_Y)
BY = np.zeros((1, NUM_Y))

lr = 0.01

shuffled_pattern = [pc for pc in range(0, NUM_PATTERN)]

begin = time.time()

for epoch in range(0, 1):
    np.random.shuffle(shuffled_pattern)
    sumE = 0
    for rc in range(0, NUM_PATTERN):
        pc = shuffled_pattern[rc]
        X = xs[pc]
        YT = yts[pc]

        # forward pass
        H = relu_f(X@WH + BH)
        M = relu_f(H@WM + BM)
        Y = sigmoid_f(M@WY + BY)

        e = calculate_MSE(Y, YT)
        sumE += e

        # back-propagate the error
        YE = sigmoid_b(Y - YT, Y)
        ME = relu_b(YE@WY.T, M)
        HE = relu_b(ME@WM.T, H)

        # weight and bias gradients
        WYE = M.T@YE
        BYE = 1*YE
        WME = H.T@ME
        BME = 1*ME
        WHE = X.T@HE
        BHE = 1*HE

        # gradient-descent update
        WY -= lr*WYE
        BY -= lr*BYE
        WM -= lr*WME
        BM -= lr*BME
        WH -= lr*WHE
        BH -= lr*BHE

        # print a dot every 1,000 patterns as a progress indicator
        if rc % 1000 == 999:
            print(".", end='', flush=True)

end = time.time()
time_taken = end - begin
print("\nTime taken (in seconds) = {}".format(time_taken))

import tensorflow as tf

import numpy as np

# six (x, y) pairs that lie on the line y = 3x + 1
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0])
ys = np.array([-2.0, 1.0, 4.0, 7.0, 10., 13.])

model = tf.keras.Sequential([
    tf.keras.layers.InputLayer(input_shape=(1,)),
    tf.keras.layers.Dense(1)
])

model.compile(optimizer='sgd', loss='mean_squared_error')
model.fit(xs, ys, epochs=5)

p = model.predict([10.0])
print('p : ', p)

# Approximating a quadratic function

import numpy as np

import time

import matplotlib.pyplot as plt

NUM_SAMPLES = 1000

np.random.seed(int(time.time()))

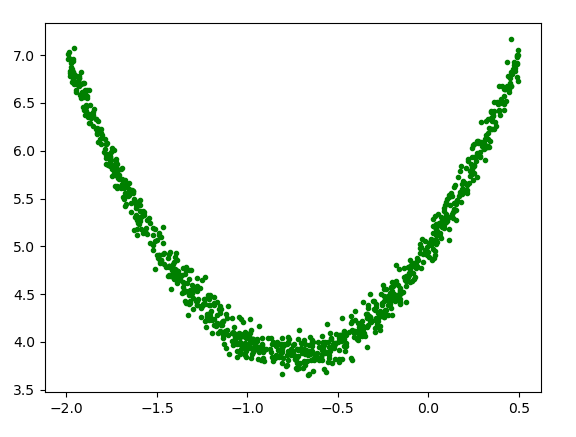

xs = np.random.uniform(-2, 0.5, NUM_SAMPLES)

np.random.shuffle(xs)

print(xs[:5])

ys = 2 * xs ** 2 + 3 * xs + 5

print(ys[:5])

plt.plot(xs, ys, 'b.')

plt.show()

"""

[-1.58954082 -0.43377667 -0.83189451 0.01967506 0.33270579]

[5.28465759 4.07499438 3.88841342 5.0597994 6.21950367]

"""# Training, separating experimental data

import numpy as np
import time
import matplotlib.pyplot as plt

NUM_SAMPLES = 1000

np.random.seed(int(time.time()))

xs = np.random.uniform(-2, 0.5, NUM_SAMPLES)
np.random.shuffle(xs)
print(xs[:5])

ys = 2 * xs ** 2 + 3 * xs + 5
print(ys[:5])

plt.plot(xs, ys, 'b.')
plt.show()

# add Gaussian noise (standard deviation 0.1) to the targets
ys += 0.1 * np.random.randn(NUM_SAMPLES)

plt.plot(xs, ys, 'g.')
plt.show()

# first 80% of the samples for training, the remaining 20% for testing
NUM_SPLIT = int(0.8 * NUM_SAMPLES)
x_train, x_test = np.split(xs, [NUM_SPLIT])
y_train, y_test = np.split(ys, [NUM_SPLIT])

plt.plot(x_train, y_train, 'b.', label='train')
plt.plot(x_test, y_test, 'r.', label='test')
plt.legend()
plt.show()
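`np.split(xs, [NUM_SPLIT])` cuts the array at index `NUM_SPLIT`, so the first 80% of the samples become the training set and the rest the test set. A minimal check of that behaviour, assuming a fixed seed through `np.random.default_rng` (the seed value 0 is an arbitrary choice) instead of the time-based seed so the result is reproducible:

```python
import numpy as np

NUM_SAMPLES = 1000
rng = np.random.default_rng(0)  # fixed, arbitrary seed for reproducibility

xs = rng.uniform(-2, 0.5, NUM_SAMPLES)
ys = 2 * xs ** 2 + 3 * xs + 5 + 0.1 * rng.standard_normal(NUM_SAMPLES)

NUM_SPLIT = int(0.8 * NUM_SAMPLES)            # 800
x_train, x_test = np.split(xs, [NUM_SPLIT])  # xs[:800] and xs[800:]
y_train, y_test = np.split(ys, [NUM_SPLIT])

print(x_train.shape, x_test.shape)  # (800,) (200,)
```

Because the x values are drawn independently, their order is already random, so taking the first 800 as training data does not bias the split.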

"""

import numpy as np

from matplotlib import pyplot as plt

from matplotlib import animation

fig = plt.figure()

ax = plt.axes(xlim=(0, 2), ylim=(-2, 2))

line, = ax.step([], [])

def init():

line.set_data([], [])

return line,

def animate(i):

x = np.linspace(0, 2, 10)

y = np.sin(2 * np.pi * (x - 0.01 * i))

line.set_data(x, y)

return line,

anim = animation.FuncAnimation(fig, animate, init_func=init,

frames=100, interval=20, blit=True)

plt.show()

"""

# Create animation to utilize matlab

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.animation import FuncAnimation

NUM_SAMPLES = 1000

xs = np.random.uniform(-2, 0.5, NUM_SAMPLES)

np.random.shuffle(xs)

ys = 2*xs**2 + 3*xs + 5

def my_func(x):

return 2*x**2 + 3*x + 5

fig, ax = plt.subplots()

ax.set_xlim(xs.min(), xs.max())

ax.set_ylim(ys.min(), ys.max())

x, y = [], []

line, = plt.plot([], [], 'bo')

def update(frame):

x.append(frame)

y.append(my_func(frame))

line.set_data(x, y)

return line,

ani = FuncAnimation(fig, update, frames=xs)

plt.show()import numpy as np

import time
import matplotlib.pyplot as plt

NUM_SAMPLES = 1000

np.random.seed(int(time.time()))

xs = np.random.uniform(-2, 0.5, NUM_SAMPLES)
np.random.shuffle(xs)
print(xs[:5])

ys = 2 * xs ** 2 + 3 * xs + 5
print(ys[:5])

plt.plot(xs, ys, 'b.')
plt.show()

ys += 0.1 * np.random.randn(NUM_SAMPLES)

plt.plot(xs, ys, 'g.')
plt.show()

NUM_SPLIT = int(0.8 * NUM_SAMPLES)
x_train, x_test = np.split(xs, [NUM_SPLIT])
y_train, y_test = np.split(ys, [NUM_SPLIT])

plt.plot(x_train, y_train, 'b.', label='train')
plt.plot(x_test, y_test, 'r.', label='test')
plt.legend()
plt.show()

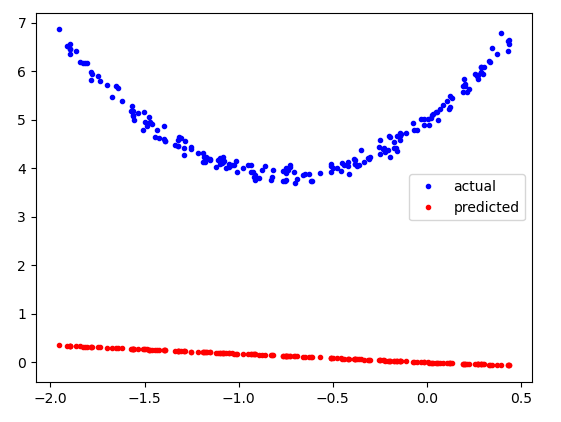
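Because these data come from a known quadratic, an ordinary least-squares polynomial fit makes a useful baseline to compare the neural network against. This sketch uses `np.polyfit`, which is not part of the original post, on synthetic data generated the same way (with an arbitrary fixed seed); the recovered coefficients should land close to 2, 3 and 5, and the test error close to the noise variance 0.1² = 0.01.

```python
import numpy as np

rng = np.random.default_rng(1)  # arbitrary fixed seed
xs = rng.uniform(-2, 0.5, 1000)
ys = 2 * xs ** 2 + 3 * xs + 5 + 0.1 * rng.standard_normal(1000)

x_train, x_test = np.split(xs, [800])
y_train, y_test = np.split(ys, [800])

# least-squares fit of a degree-2 polynomial on the training split only
coef = np.polyfit(x_train, y_train, 2)   # highest power first: [a, b, c]
p_test = np.polyval(coef, x_test)

test_mse = np.mean((p_test - y_test) ** 2)
print("coefficients:", coef)
print("test MSE:", test_mse)
```

A network that reaches a test MSE in the same range as this baseline has learned the curve about as well as the noise allows.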

import tensorflow as tf

model_f = tf.keras.Sequential([
    tf.keras.layers.InputLayer(input_shape=(1, )),
    tf.keras.layers.Dense(16, activation='relu'),
    tf.keras.layers.Dense(16, activation='relu'),
    tf.keras.layers.Dense(1)
])

model_f.compile(optimizer='rmsprop', loss='mse')

# predictions of the still-untrained model (weights are randomly initialized)
p_test = model_f.predict(x_test)

plt.plot(x_test, y_test, 'b.', label='actual')
plt.plot(x_test, p_test, 'r.', label='predicted')
plt.legend()
plt.show()
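To see what `model_f` computes, here is a NumPy sketch of the same 1 → 16 → 16 → 1 architecture: each Dense layer is `x @ W + b`, with ReLU on the two hidden layers and a linear output. The initialization mimics the Keras Dense defaults (Glorot-uniform kernel, zero bias), but the random values are not the ones Keras would draw, so the output is just as meaningless as the untrained predictions plotted above. The parameter count is (1·16 + 16) + (16·16 + 16) + (16·1 + 1) = 321.

```python
import numpy as np

rng = np.random.default_rng(0)  # arbitrary fixed seed

def dense_params(n_in, n_out):
    # Glorot-uniform kernel and zero bias, like the Keras Dense defaults
    limit = np.sqrt(6.0 / (n_in + n_out))
    W = rng.uniform(-limit, limit, (n_in, n_out))
    b = np.zeros(n_out)
    return W, b

def relu(z):
    return np.maximum(z, 0.0)

layers = [dense_params(1, 16), dense_params(16, 16), dense_params(16, 1)]

def forward(x):
    h = x.reshape(-1, 1)                  # (batch, 1)
    for i, (W, b) in enumerate(layers):
        h = h @ W + b
        if i < len(layers) - 1:           # ReLU on hidden layers only
            h = relu(h)
    return h                              # (batch, 1), linear output

n_params = sum(W.size + b.size for W, b in layers)
print(n_params)                           # 321

x_test = rng.uniform(-2, 0.5, 200)
p_test = forward(x_test)
print(p_test.shape)                       # (200, 1)
```

Training (the `fit` call) adjusts exactly these 321 numbers so that `forward(x)` approaches 2x² + 3x + 5.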

# Separating the training data from the test data

import numpy as np
import time
import matplotlib.pyplot as plt

NUM_SAMPLES = 1000

np.random.seed(int(time.time()))

xs = np.random.uniform(-2, 0.5, NUM_SAMPLES)
np.random.shuffle(xs)
print(xs[:5])

ys = 2 * xs ** 2 + 3 * xs + 5
print(ys[:5])

plt.plot(xs, ys, 'b.')
plt.show()

ys += 0.1 * np.random.randn(NUM_SAMPLES)

plt.plot(xs, ys, 'g.')
plt.show()

NUM_SPLIT = int(0.8 * NUM_SAMPLES)
x_train, x_test = np.split(xs, [NUM_SPLIT])
y_train, y_test = np.split(ys, [NUM_SPLIT])

plt.plot(x_train, y_train, 'b.', label='train')
plt.plot(x_test, y_test, 'r.', label='test')
plt.legend()
plt.show()

import tensorflow as tf

model_f = tf.keras.Sequential([
    tf.keras.layers.InputLayer(input_shape=(1, )),
    tf.keras.layers.Dense(16, activation='relu'),
    tf.keras.layers.Dense(16, activation='relu'),
    tf.keras.layers.Dense(1)
])

model_f.compile(optimizer='rmsprop', loss='mse')

p_test = model_f.predict(x_test)

plt.plot(x_test, y_test, 'b.', label='actual')
plt.plot(x_test, p_test, 'r.', label='predicted')
plt.legend()
plt.show()

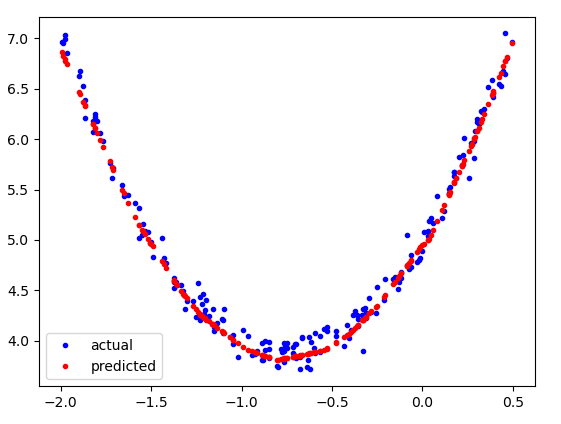

# Training the neural network

model_f.fit(x_train, y_train, epochs=600)

p_test = model_f.predict(x_test)

plt.plot(x_test, y_test, 'b.', label='actual')
plt.plot(x_test, p_test, 'r.', label='predicted')
plt.legend()
plt.show()
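`model_f` is compiled with `optimizer='rmsprop'`. The textbook RMSprop rule keeps a running average of squared gradients for each parameter and divides each step by its square root, so every parameter gets its own effective step size. A minimal NumPy sketch of that rule, applied to a two-parameter model y = w·x + b on the six points of the earlier Keras example (which lie on y = 3x + 1). The values of `lr` and the step count are illustrative assumptions; `rho = 0.9` matches the Keras default, and Keras's own implementation may differ in details such as where `eps` is added.

```python
import numpy as np

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0])
ys = np.array([-2.0, 1.0, 4.0, 7.0, 10.0, 13.0])   # y = 3x + 1

params = np.zeros(2)            # [w, b]
v = np.zeros(2)                 # running average of squared gradients
lr, rho, eps = 0.01, 0.9, 1e-7

def grad_mse(params):
    w, b = params
    err = w * xs + b - ys
    # gradient of mean((w*x + b - y)^2) with respect to w and b
    return np.array([2 * np.mean(err * xs), 2 * np.mean(err)])

for step in range(3000):
    g = grad_mse(params)
    v = rho * v + (1 - rho) * g ** 2
    params -= lr * g / (np.sqrt(v) + eps)

w, b = params
print("w = %.3f, b = %.3f" % (w, b))
```

With a constant learning rate the parameters end up hovering in a small neighbourhood of (3, 1) rather than settling exactly on it; the size of that neighbourhood shrinks with `lr`.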

import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0
x_train, x_test = x_train.reshape((60000, 784)), x_test.reshape((10000, 784))

model = tf.keras.Sequential([
    tf.keras.layers.InputLayer(input_shape=(784,)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test)

"""
Epoch 1/5
1875/1875 [==============================] - 3s 1ms/step - loss: 0.2562 - accuracy: 0.9271
Epoch 2/5
1875/1875 [==============================] - 3s 1ms/step - loss: 0.1128 - accuracy: 0.9663
Epoch 3/5
1875/1875 [==============================] - 3s 1ms/step - loss: 0.0774 - accuracy: 0.9772
Epoch 4/5
1875/1875 [==============================] - 3s 1ms/step - loss: 0.0587 - accuracy: 0.9819
Epoch 5/5
1875/1875 [==============================] - 3s 1ms/step - loss: 0.0447 - accuracy: 0.9862
313/313 [==============================] - 1s 1ms/step - loss: 0.0741 - accuracy: 0.9774
"""

import tensorflow as tf

mnist = tf.keras.datasets.mnist

(x_train, y_train),(x_test, y_test) = mnist.load_data()

print("x_train: %s, y_train: %s, x_test: %s, y_test:%s " %(

x_train.shape, y_train.shape, x_test.shape, y_test.shape))

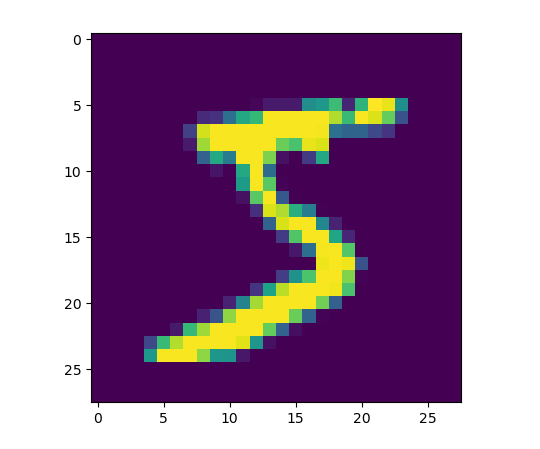

# x_train: (60000, 28, 28), y_train: (60000,), x_test: (10000, 28, 28), y_test:(10000,)import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

print("x_train: %s, y_train: %s, x_test: %s, y_test:%s " % (
    x_train.shape, y_train.shape, x_test.shape, y_test.shape))

import matplotlib.pyplot as plt

plt.figure()
plt.imshow(x_train[0])
plt.show()
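Before the images go into a Dense layer, the scripts in this post scale the 0 to 255 pixel values to [0, 1] and flatten each 28 × 28 image into a 784-element row. The sketch below shows both steps on a small synthetic `uint8` batch standing in for `x_train` (the random pixels are an assumption so the example runs without downloading MNIST); `reshape(-1, 784)` is equivalent to the hard-coded `reshape(60000, 784)` when applied to the full set.

```python
import numpy as np

rng = np.random.default_rng(0)
batch = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)  # fake images

x = batch / 255.0            # uint8 -> float64 in [0, 1]
x = x.reshape(-1, 784)       # (3, 28, 28) -> (3, 784), row-major flattening

print(x.shape, x.dtype)      # (3, 784) float64
# flattening keeps row order: pixel (row r, col c) lands at index r * 28 + c
assert x[0, 5 * 28 + 7] == batch[0, 5, 7] / 255.0
```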

import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

print("x_train: %s, y_train: %s, x_test: %s, y_test:%s " % (
    x_train.shape, y_train.shape, x_test.shape, y_test.shape))

import matplotlib.pyplot as plt

plt.figure()
plt.imshow(x_train[0])
plt.show()

# print the raw 0-255 pixel values of the first image as a 28 x 28 grid
for y in range(28):
    for x in range(28):
        print("%4s" % x_train[0][y][x], end='')
    print()

import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

print("x_train: %s, y_train: %s, x_test: %s, y_test:%s " % (
    x_train.shape, y_train.shape, x_test.shape, y_test.shape))

import matplotlib.pyplot as plt

plt.figure()
plt.imshow(x_train[0])
plt.show()

for y in range(28):
    for x in range(28):
        print("%4s" % x_train[0][y][x], end='')
    print()

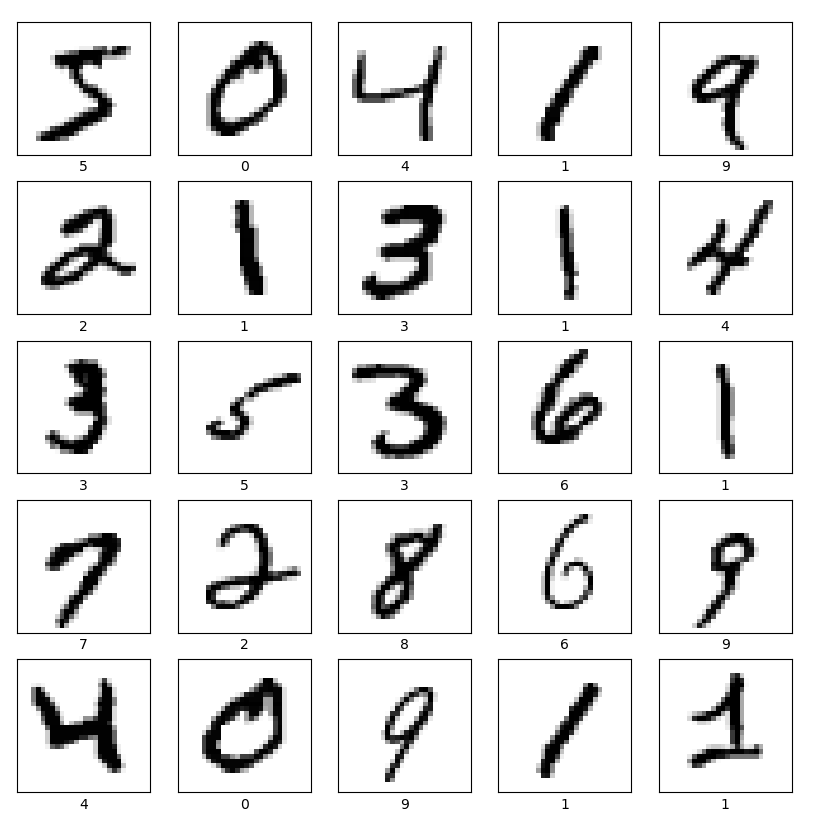
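The nested loop above prints one pixel at a time with `"%4s"`. An equivalent way to build the same text grid row by row, shown on a synthetic 28 × 28 `uint8` array in place of `x_train[0]` (an assumption so the snippet runs on its own):

```python
import numpy as np

img = np.zeros((28, 28), dtype=np.uint8)
img[10:18, 12:16] = 255          # a fake vertical stroke

# each pixel right-aligned in a 4-character field, one text line per image row
rows = ["".join("%4s" % v for v in row) for row in img]
print("\n".join(rows))
```

Each line is 28 × 4 = 112 characters wide, so the printout keeps the image's aspect roughly intact in a monospaced terminal.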

# show the first 25 training images with their labels
plt.figure(figsize=(10, 10))
for i in range(25):
    plt.subplot(5, 5, i+1)
    plt.xticks([])
    plt.yticks([])
    plt.imshow(x_train[i], cmap=plt.cm.binary)
    plt.xlabel(y_train[i])
plt.show()

x_train, x_test = x_train / 255.0, x_test / 255.0
x_train, x_test = x_train.reshape(60000, 784), x_test.reshape(10000, 784)

model = tf.keras.models.Sequential([
    tf.keras.layers.InputLayer(input_shape=(784,)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test)

p_test = model.predict(x_test)
print('p_test[0] :', p_test[0])
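The final softmax layer turns 10 scores into probabilities, which is why `p_test[0]` prints ten numbers that sum to 1. With `loss='sparse_categorical_crossentropy'` the labels stay as integers 0 to 9; the loss for one sample is −log of the probability given to the true class, which is the same value categorical cross-entropy produces with a one-hot label. A NumPy sketch with made-up scores for three classes:

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

logits = np.array([[2.0, 0.5, -1.0],
                   [0.1, 0.2, 3.0]])        # made-up scores, 3 classes
labels = np.array([0, 2])                   # integer ("sparse") labels

p = softmax(logits)
sparse_ce = -np.log(p[np.arange(len(labels)), labels])

one_hot = np.eye(3)[labels]                 # the same labels, one-hot encoded
categorical_ce = -np.sum(one_hot * np.log(p), axis=1)

print(p.sum(axis=1))                        # each row sums to 1
print(np.argmax(p, axis=1))                 # predicted classes: [0 2]
print(sparse_ce, categorical_ce)            # identical values
```

`np.argmax` over the probabilities is also how the predicted digit is read out of `p_test`.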

import tensorflow as tf

mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

print("x_train: %s, y_train: %s, x_test: %s, y_test:%s " % (
    x_train.shape, y_train.shape, x_test.shape, y_test.shape))

import matplotlib.pyplot as plt

plt.figure()
plt.imshow(x_train[0])
plt.show()

for y in range(28):
    for x in range(28):
        print("%4s" % x_train[0][y][x], end='')
    print()

plt.figure(figsize=(10, 10))
for i in range(25):
    plt.subplot(5, 5, i+1)
    plt.xticks([])
    plt.yticks([])
    plt.imshow(x_train[i], cmap=plt.cm.binary)
    plt.xlabel(y_train[i])
plt.show()

x_train, x_test = x_train / 255.0, x_test / 255.0
x_train, x_test = x_train.reshape(60000, 784), x_test.reshape(10000, 784)

model = tf.keras.models.Sequential([
    tf.keras.layers.InputLayer(input_shape=(784,)),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5)
model.evaluate(x_test, y_test)

p_test = model.predict(x_test)
print('p_test[0] :', p_test[0])

import numpy as np

# the predicted digit is the index of the largest probability
print('p_test[0] :', np.argmax(p_test[0]), 'y_test[0] :', y_test[0])

# restore the 28 x 28 shape for display
x_test = x_test.reshape(10000, 28, 28)

plt.figure()
plt.imshow(x_test[0])
plt.show()

# first 25 test images labelled with the model's predictions
plt.figure(figsize=(10, 10))
for i in range(25):
    plt.subplot(5, 5, i+1)
    plt.xticks([])
    plt.yticks([])
    plt.imshow(x_test[i], cmap=plt.cm.binary)
    plt.xlabel(np.argmax(p_test[i]))
plt.show()

# collect the indices of misclassified test images
cnt_wrong = 0
p_wrong = []
for i in range(10000):
    if np.argmax(p_test[i]) != y_test[i]:
        p_wrong.append(i)
        cnt_wrong += 1

print('cnt_wrong :', cnt_wrong)
print('predicted wrong 10: ', p_wrong[:10])

# show 25 misclassified images as "index : p<predicted> y<actual>"
plt.figure(figsize=(10, 10))
for i in range(25):
    plt.subplot(5, 5, i+1)
    plt.xticks([])
    plt.yticks([])
    plt.imshow(x_test[p_wrong[i]], cmap=plt.cm.binary)
    plt.xlabel("%s : p%s y%s" % (
        p_wrong[i], np.argmax(p_test[p_wrong[i]]), y_test[p_wrong[i]]))
plt.show()

import numpy as np

import time
import tensorflow as tf
from mydnn import *

np.set_printoptions(formatter={'float_kind':lambda x: "{0:6.3f}".format(x)})

# load MNIST, scale pixels to [0, 1], and reshape each image to a 1 x 784 row
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0
x_train = x_train.reshape((60000, 1, 784))

# one-hot encode the labels, e.g. 3 -> [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
y_train = np.array(tf.one_hot(y_train, depth=10))
y_train = y_train.reshape((60000, 1, 10))

NUM_PATTERN = 60000
NUM_X = 784
NUM_H = 16
NUM_M = 16
NUM_Y = 10

np.random.seed(0)

xs = x_train
yts = y_train

WH = initialize_weight_He(NUM_X, NUM_H)
BH = np.zeros((1, NUM_H))
WM = initialize_weight_He(NUM_H, NUM_M)
BM = np.zeros((1, NUM_M))
WY = initialize_weight_Le(NUM_M, NUM_Y)
BY = np.zeros((1, NUM_Y))

lr = 0.01

shuffled_pattern = [pc for pc in range(0, NUM_PATTERN)]

begin = time.time()

for epoch in range(0, 3):
    np.random.shuffle(shuffled_pattern)
    sumE = 0
    hit, miss = 0, 0
    for rc in range(0, NUM_PATTERN):
        pc = shuffled_pattern[rc]
        X = xs[pc]
        YT = yts[pc]

        # forward pass
        H = relu_f(X@WH + BH)
        M = relu_f(H@WM + BM)
        Y = sigmoid_f(M@WY + BY)

        # a hit when the largest output matches the true digit
        if np.argmax(Y) == np.argmax(YT):
            hit += 1
        else:
            miss += 1

        e = calculate_MSE(Y, YT)
        sumE += e

        # back-propagate the error
        YE = sigmoid_b(Y - YT, Y)
        ME = relu_b(YE@WY.T, M)
        HE = relu_b(ME@WM.T, H)

        # weight and bias gradients
        WYE = M.T@YE
        BYE = 1*YE
        WME = H.T@ME
        BME = 1*ME
        WHE = X.T@HE
        BHE = 1*HE

        # gradient-descent update
        WY -= lr*WYE
        BY -= lr*BYE
        WM -= lr*WME
        BM -= lr*BME
        WH -= lr*WHE
        BH -= lr*BHE

        # report every 10,000 patterns: loss averaged over those 10,000,
        # accuracy accumulated since the start of the epoch
        if rc % 10000 == 9999:
            print("epoch: %2d rc: %6d " % (epoch, rc+1), end='')
            print("hit: %6d miss: %6d " % (hit, miss), end='')
            print("loss: %f accuracy: %f"
                  % (sumE/10000, hit/(hit+miss)))
            sumE = 0

end = time.time()
time_taken = end - begin
print("\nTime taken (in seconds) = {}".format(time_taken))
